Fully integrated system

Hardware Architecture

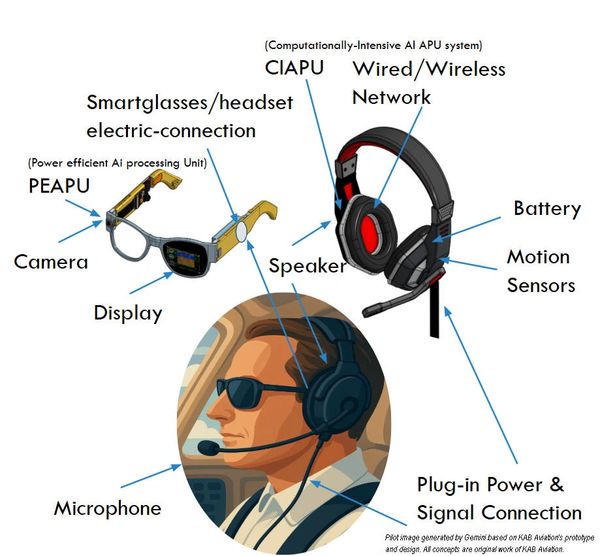

The smartglasses hardware design integrates a lightweight display positioned at eye level, directly in the user's line of sight, paired with an onboard camera and microphone for real-time visual and audio capture. A Power-Efficient AI Processing Unit (PEAPU) handles continuous, low-latency edge inference, while a Computationally-Intensive AI Processing Unit (CIAPU) supports advanced AI workloads when higher performance is required. The system also incorporates motion sensors, active noise-cancellation microphones, and speakers. Power is supplied by an integrated battery, with optional plug-in power support.

Software Architecture

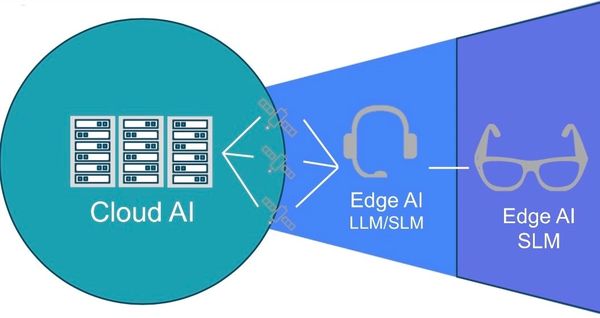

Our AI CoPilot software operates on three levels. First, a power-efficient Edge AI on the smart glasses integrates sensor data to raise fatigue and motion alerts, provide actionable guidance, and display real-time data. Second, Edge AI also runs on our headset system, where a compact language model provides near-instant decision support. Third, Cloud AI uses state-of-the-art hybrid multimodal models for deep analysis and predictive insights, combining deterministic elements for consistency with end-to-end generative AI for complex emergency situations.
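As an illustration of how work might be divided across these three levels, here is a minimal Python sketch. It is not our production logic: the names `Tier`, `Request`, and `route`, the request flags, and the fallback to the headset when the cloud is unreachable are all assumptions made for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    """The three software levels described above (names are hypothetical)."""
    GLASSES_EDGE = "glasses_edge"   # power-efficient Edge AI on the glasses
    HEADSET_EDGE = "headset_edge"   # compact language model on the headset
    CLOUD = "cloud"                 # hybrid multimodal models in the cloud


@dataclass
class Request:
    """A hypothetical unit of work; the flags are illustrative assumptions."""
    kind: str
    needs_language_model: bool = False
    needs_deep_analysis: bool = False


def route(request: Request, cloud_available: bool = True) -> Tier:
    """Pick the lightest tier that can plausibly serve the request.

    Assumption: when the cloud is unreachable, deep-analysis requests
    fall back to the headset's compact language model.
    """
    if request.needs_deep_analysis and cloud_available:
        return Tier.CLOUD
    if request.needs_language_model or request.needs_deep_analysis:
        return Tier.HEADSET_EDGE
    # Sensor-driven alerts (fatigue, motion) stay on the glasses.
    return Tier.GLASSES_EDGE


if __name__ == "__main__":
    print(route(Request("fatigue_alert")))
    print(route(Request("decision_support", needs_language_model=True)))
    print(route(Request("deep_analysis", needs_deep_analysis=True)))
```

Routing to the lightest capable tier first reflects the power-efficiency goal stated above; a real system would also weigh latency, battery level, and connectivity.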

Wearable Display

Our waveguide technology is embedded directly into the lens and projects images into the wearer's line of sight. By using LCoS (liquid crystal on silicon) technology and LEDs, this design keeps the glasses lightweight and affordable while maximizing battery life.

Reference:

https://kguttag.com/2025/10/30/meta-ray-ban-display-part-1-lumus-wave

Technology behind our AI CoPilot

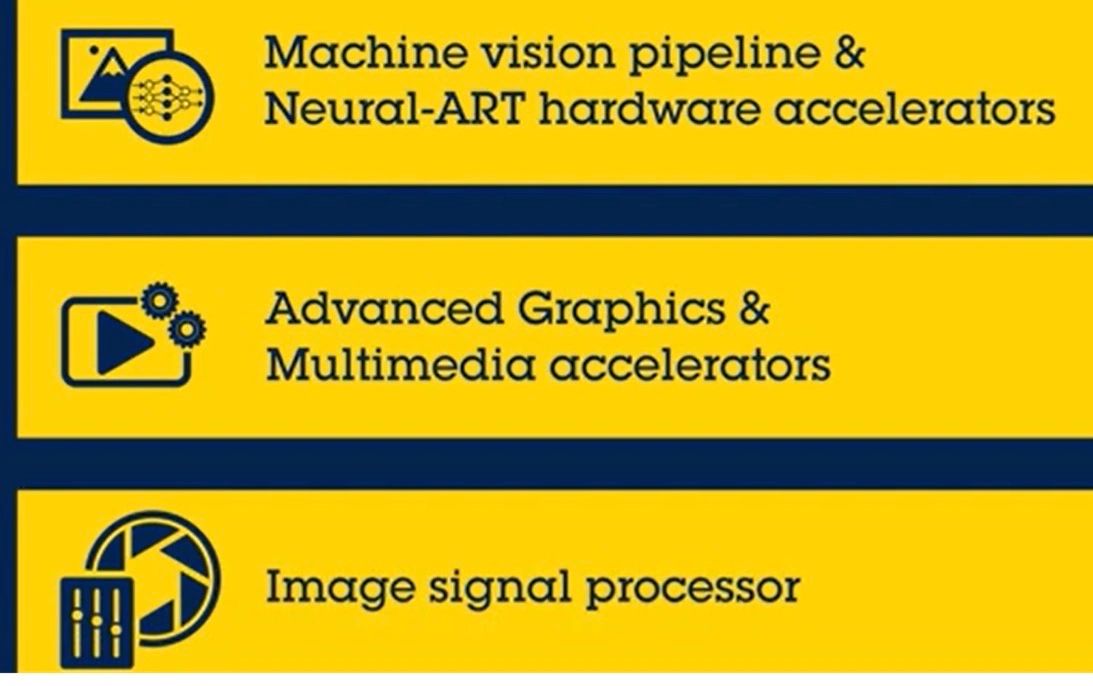

Powered by the STM32N6 platform, our system is built on the Arm® Cortex®-M55 processor with an integrated Neural Processing Unit (NPU), enabling efficient on-device AI acceleration. Our technology supports advanced computer vision and image signal processing, allowing real-time visual analysis directly on the device.
